AR Digital Fabrication

Projected AR Research at Google Robotics

Google, 2015

From 2013 to 2015 I extensively researched AR, VR, and projection interfaces as tools for collaborating with robots, 3D modeling, and digital fabrication.

Unfortunately, documentation from this period is limited, but the following is a subset of experiments focused specifically on projected AR.

I also explored screen-based AR as a tool for robot path planning, and using compliant force-feedback robot arms as haptic input devices for VR modeling simulations.

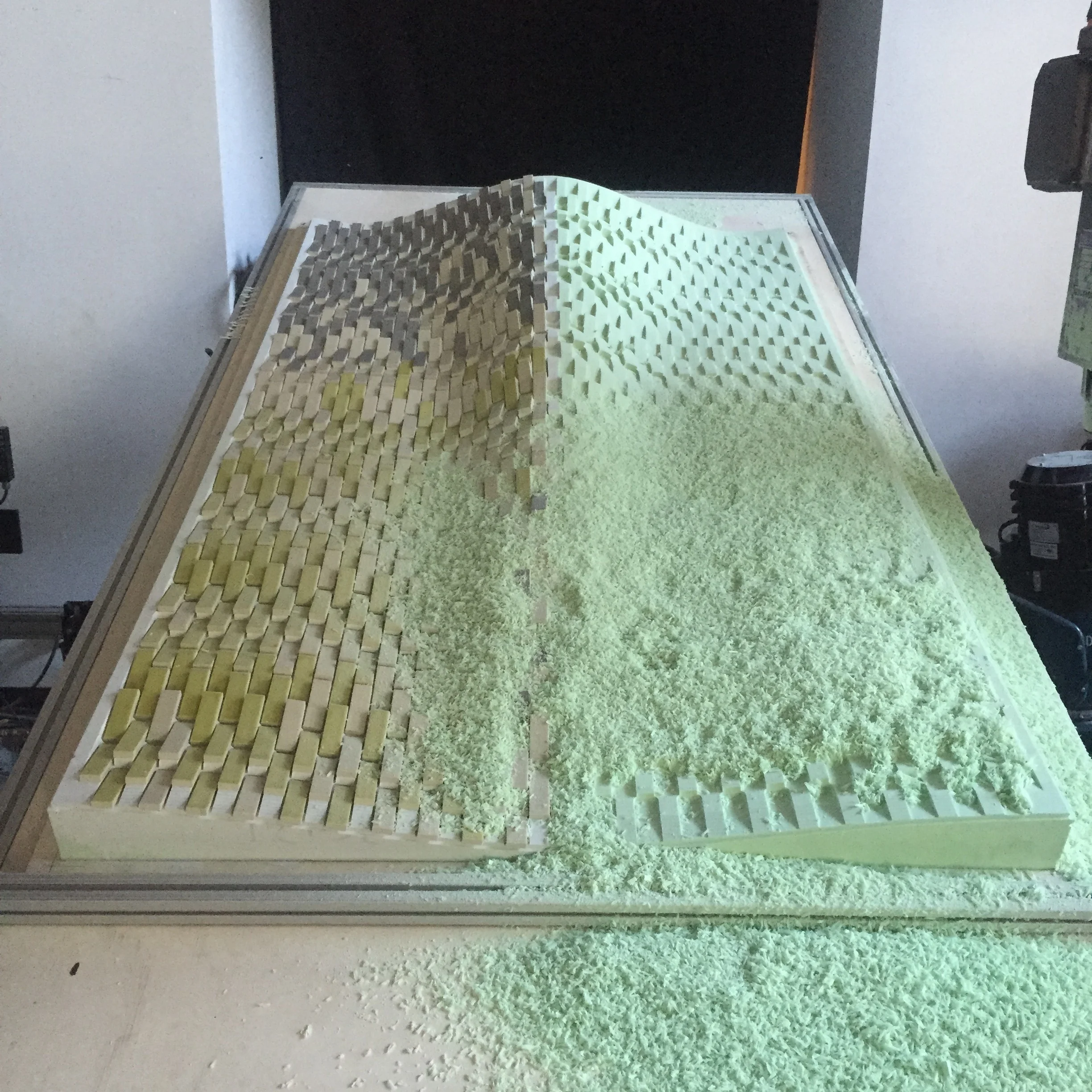

Shape AR

A projected guide for subtractive fabrication, Shape AR used perspective-dependent rendering to show a 3D model inside a solid block of foam.

The system projected a heat map indicating where material needed to be removed or added to achieve the desired form. This example shows head tracking and perspective-corrected rendering of a surfboard model, giving the user "X-ray" vision of their final piece.
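The original heat-map code is not documented here. As a minimal sketch of the idea, assuming the current surface and the target form are both available as height grids in the same units (an assumption for illustration, as are the names `deviation_map` and `tolerance`), each cell can be classified by its signed deviation from the target:

```python
def deviation_map(current, target, tolerance=0.5):
    """Classify each cell of a height grid as 'remove', 'add', or 'ok'.

    current, target: 2D lists of surface heights in the same units
                     (hypothetical inputs, not the original data format).
    tolerance: deviations within this band count as finished.
    """
    result = []
    for cur_row, tgt_row in zip(current, target):
        row = []
        for cur, tgt in zip(cur_row, tgt_row):
            delta = cur - tgt  # positive: excess material above the target
            if delta > tolerance:
                row.append("remove")
            elif delta < -tolerance:
                row.append("add")
            else:
                row.append("ok")
        result.append(row)
    return result


if __name__ == "__main__":
    current = [[10.0, 5.0, 7.2]]
    target = [[7.0, 7.0, 7.0]]
    print(deviation_map(current, target))
```

A projected heat map would then assign a color to each class, or to the raw deviation, before rendering it onto the foam.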

Users could also move physical pucks on the work surface to reposition the Bézier handles defining the object's shape.
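To illustrate how a handle position shapes an outline, here is a small sketch that evaluates a cubic Bézier segment in 2D. It assumes each tracked puck supplies an (x, y) position for one control point; the function names and the puck coordinates are hypothetical and not taken from the original system.

```python
def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a 2D cubic Bezier segment at parameter t in [0, 1].

    p0 and p3 are the end points; p1 and p2 are the handles.
    """
    u = 1.0 - t
    # Bernstein basis weights for a cubic curve.
    b0, b1, b2, b3 = u ** 3, 3 * u * u * t, 3 * u * t * t, t ** 3
    x = b0 * p0[0] + b1 * p1[0] + b2 * p2[0] + b3 * p3[0]
    y = b0 * p0[1] + b1 * p1[1] + b2 * p2[1] + b3 * p3[1]
    return (x, y)


def sample_segment(p0, p1, p2, p3, steps=16):
    """Return steps + 1 points along the segment, end points included."""
    return [cubic_bezier(p0, p1, p2, p3, i / steps) for i in range(steps + 1)]


if __name__ == "__main__":
    # Hypothetical puck positions acting as the two handles.
    puck_a, puck_b = (0.0, 1.0), (1.0, 1.0)
    outline = sample_segment((0.0, 0.0), puck_a, puck_b, (1.0, 0.0), steps=4)
    print(outline)
```

Moving a puck changes `p1` or `p2`, and resampling the segment gives the updated outline to project.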

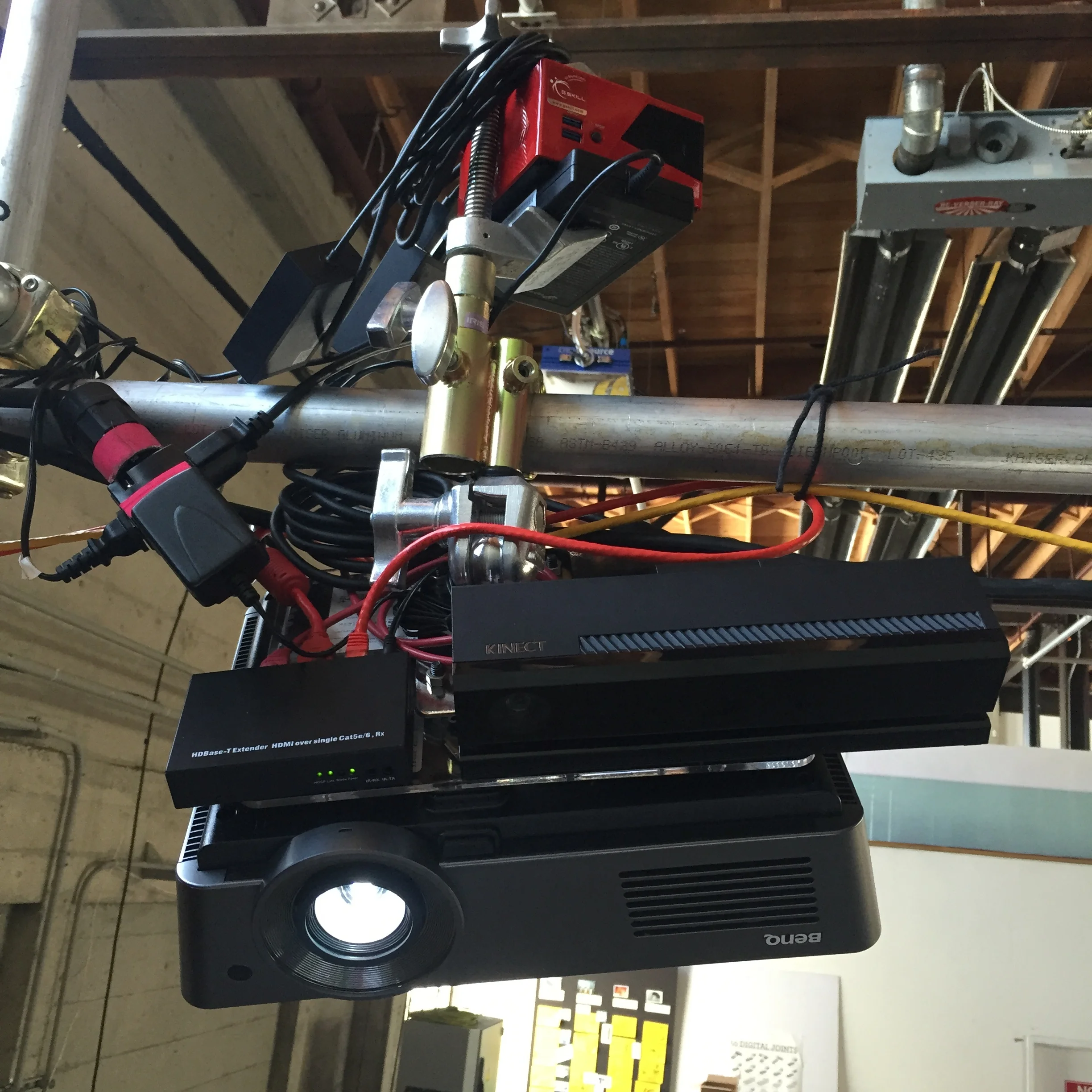

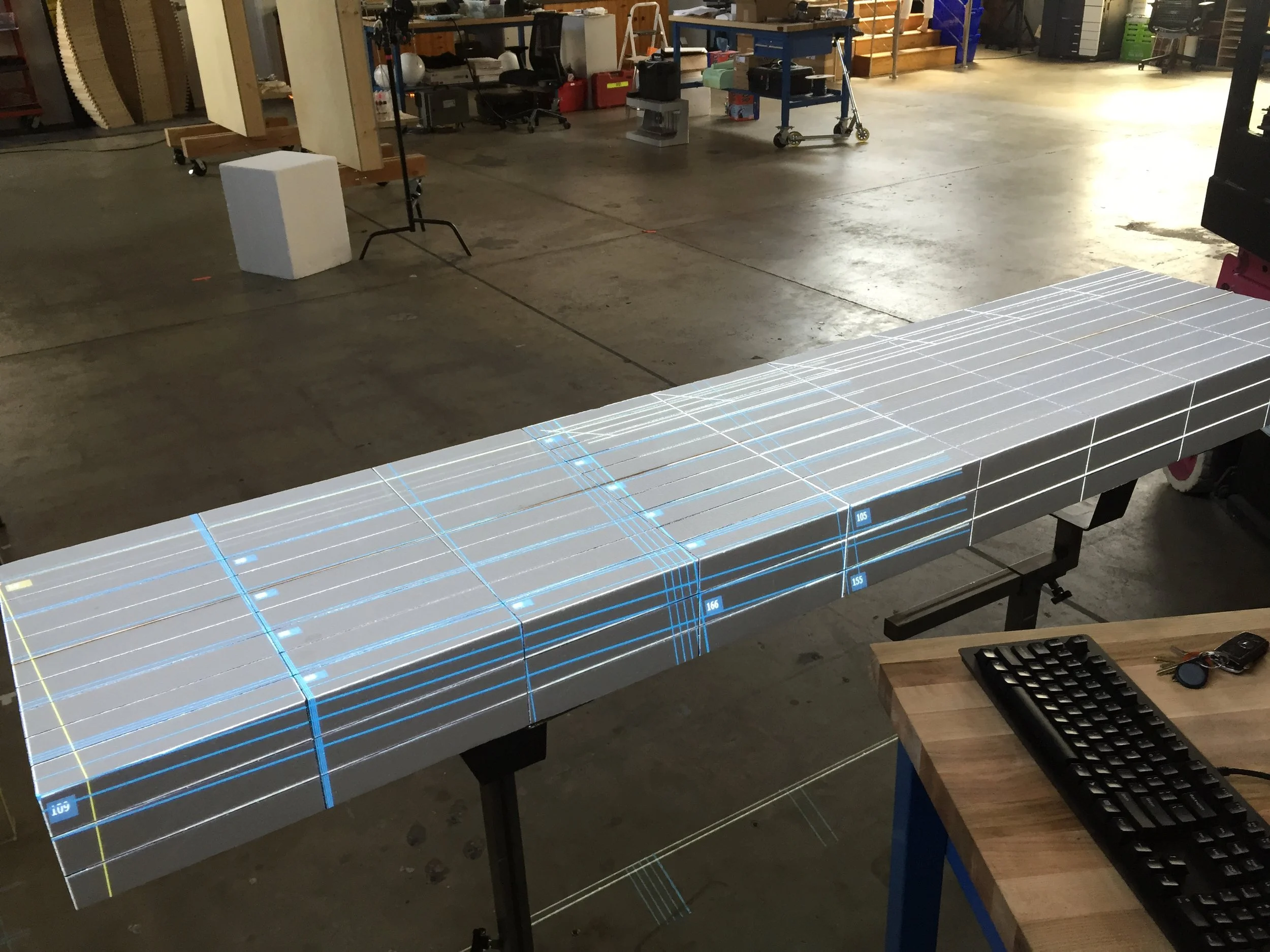

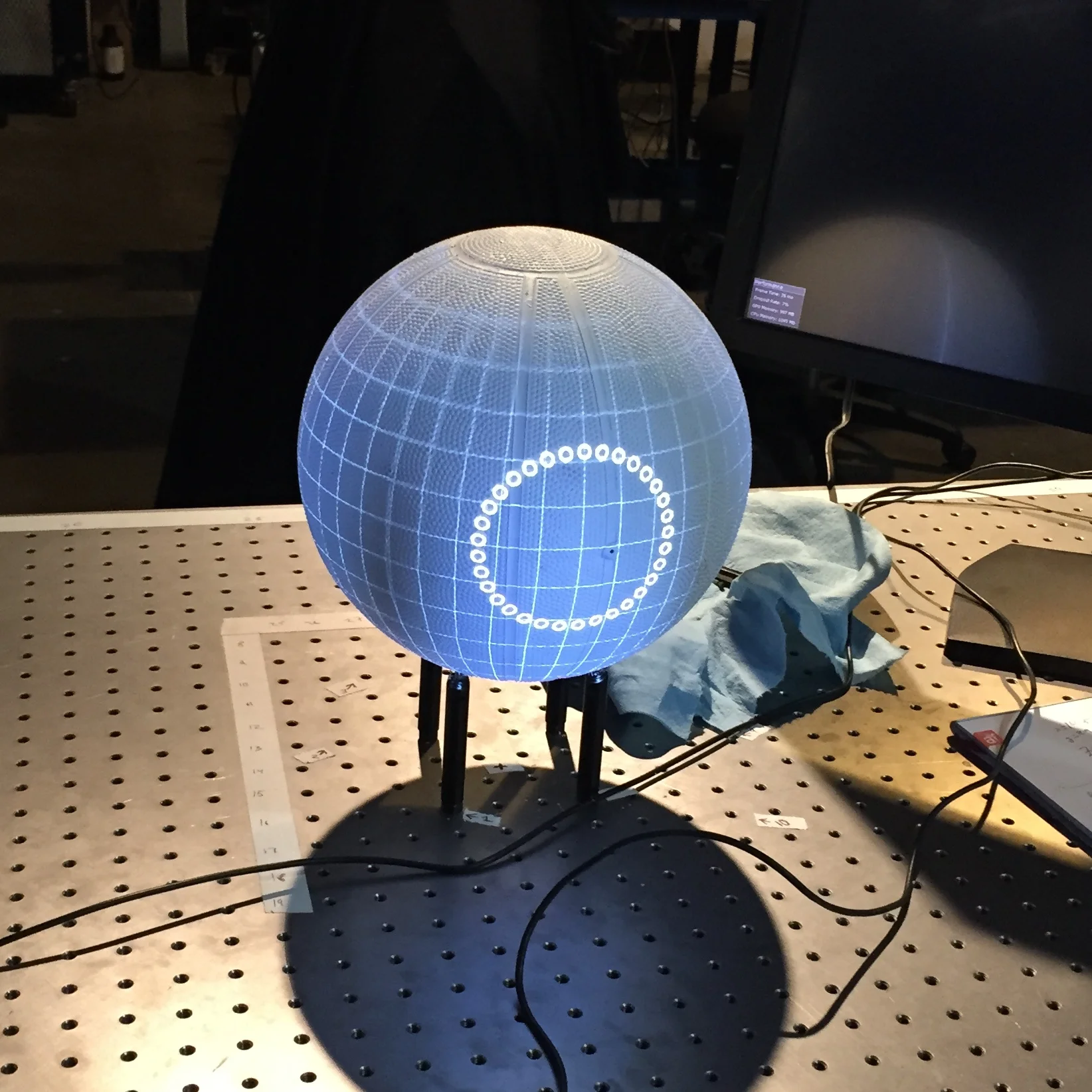

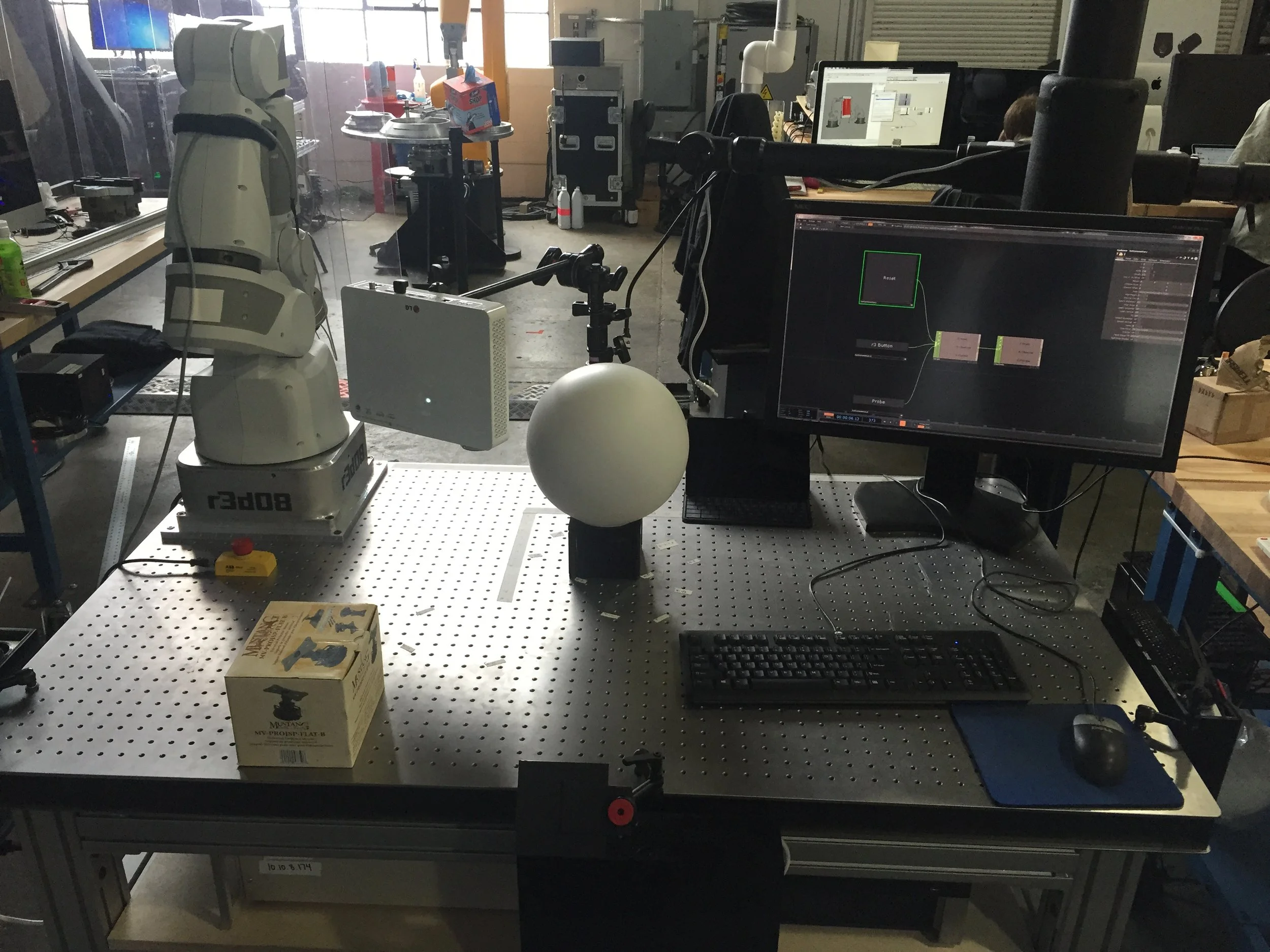

Light Table

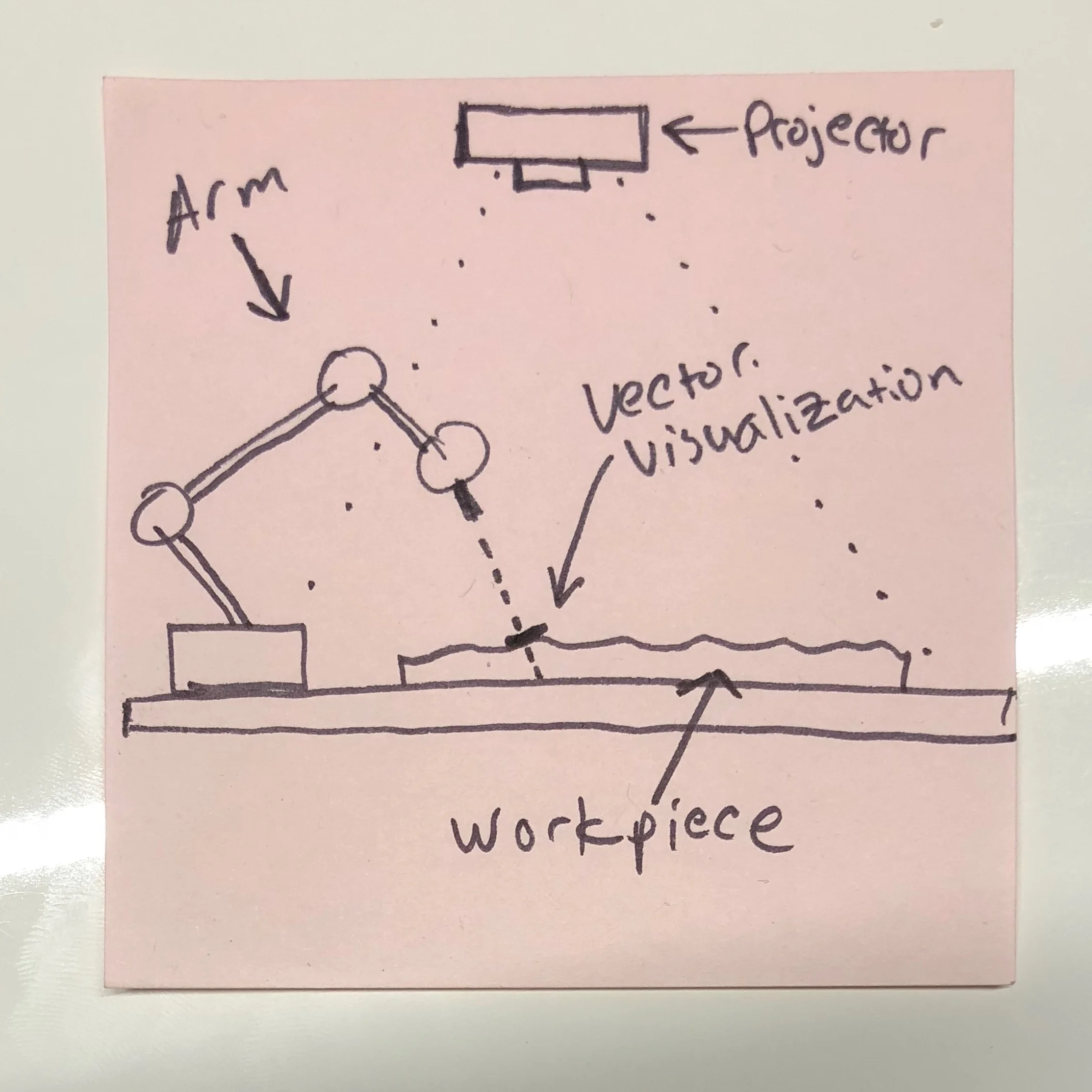

Light Table was an interactive work surface that allowed users to visualize vectors, hole patterns, and milling paths that would be performed by a compliant robotic arm.

When the arm was manipulated by hand, it acted as an input device, and the projection updated in real time based on the robot's position.

Concept sketch

Manipulating the arm

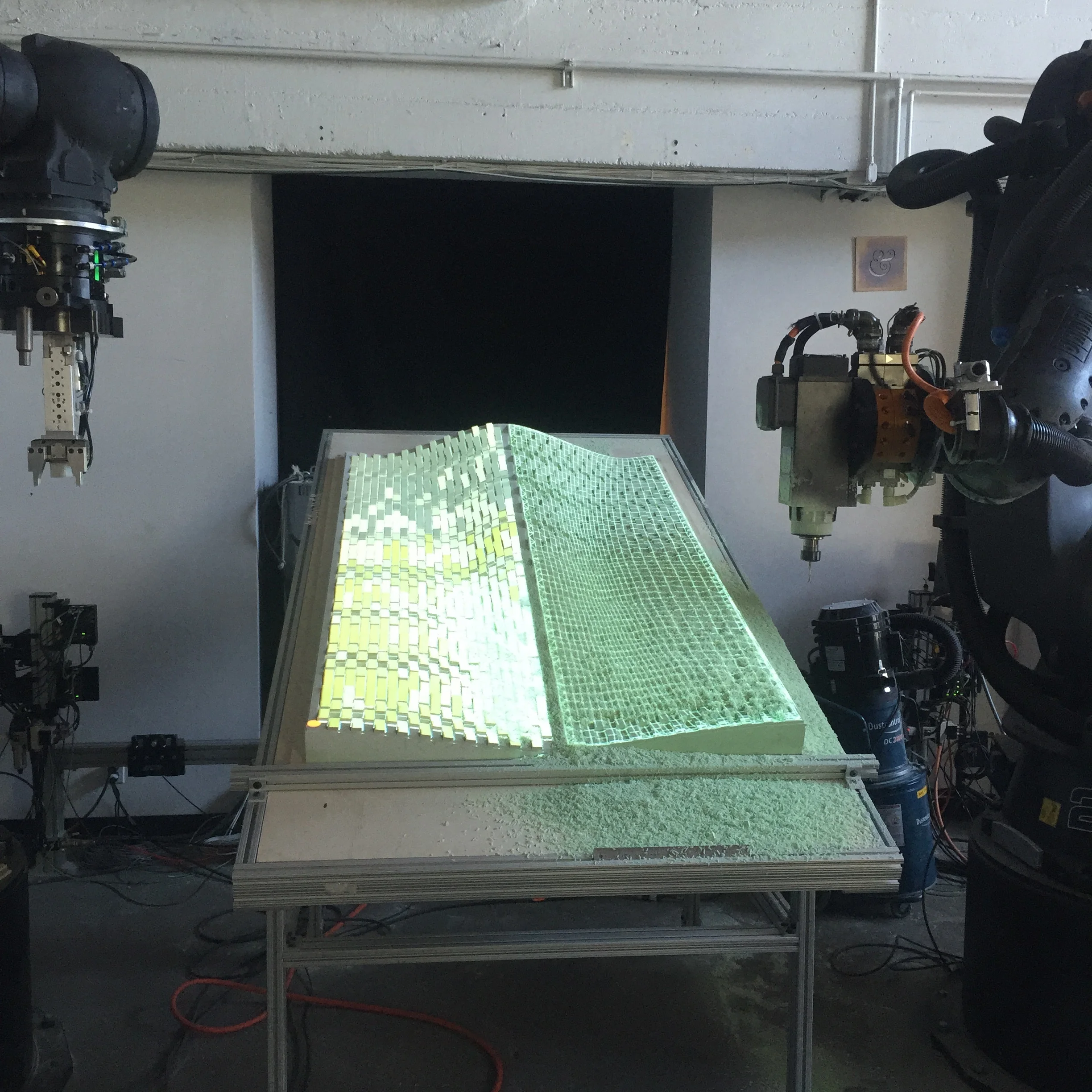

Collaborative Fabrication

A decorative panel is created with a multi-step fabrication process involving milling pockets and adhering tiles.

Projection is calibrated with the work surface to visualize the robot's upcoming steps.

This allows a human to safely stay in the loop for spot QA checks, dust removal, and maintenance without needing to pause the robots.
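The calibration method used for these experiments is not documented. One common approach for a flat work surface, sketched here as an assumption and not as the original implementation, is to fit a planar homography from four known surface points to the projector pixels that land on them. The function names and sample coordinates below are hypothetical.

```python
def solve_linear(a, b):
    """Solve a square linear system with Gauss-Jordan elimination."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: pick the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point correspondences")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_homography(surface_pts, projector_pts):
    """Fit a 3x3 homography from exactly four (x, y) -> (u, v) pairs."""
    a, b = [], []
    for (x, y), (u, v) in zip(surface_pts, projector_pts):
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)  # eight unknowns; the ninth entry is fixed to 1
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h, point):
    """Map a work-surface point to projector pixel coordinates."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


if __name__ == "__main__":
    # Hypothetical calibration data: surface corners and measured projector pixels.
    surface = [(0, 0), (1, 0), (1, 1), (0, 1)]
    pixels = [(100, 50), (900, 80), (880, 700), (120, 650)]
    h = fit_homography(surface, pixels)
    print(apply_homography(h, (0.5, 0.5)))
```

With such a mapping, each upcoming toolpath point in work-surface coordinates can be converted to the projector pixel that should light up at that location.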